Raspberry Pi OpenVINO with Intel Movidius

(Neural Compute Stick)

What is OpenVINO?

The Intel® Distribution of OpenVINO™ toolkit quickly deploys applications and solutions that emulate human vision. Based on Convolutional Neural Networks (CNN), the toolkit extends computer vision (CV) workloads across Intel® hardware, maximizing performance. The Intel Distribution of OpenVINO toolkit includes the Intel® Deep Learning Deployment Toolkit (Intel® DLDT).

OpenVINO™ toolkit, short for Open Visual Inference and Neural network Optimization toolkit, provides developers with improved neural network performance on a variety of Intel® processors and helps them further unlock cost-effective, real-time vision applications. The toolkit enables deep learning inference and easy heterogeneous execution across multiple Intel® platforms (CPU, Intel® Processor Graphics), providing implementations from cloud architectures to edge devices. This open source distribution provides flexibility and availability to the developer community to innovate deep learning and AI solutions.

OpenVINO™ toolkit contains:

The Intel® Distribution of OpenVINO™ toolkit is also available with additional, proprietary support for Intel® FPGAs, Intel® Movidius™ Neural Compute Stick, Intel® Gaussian Mixture Model - Neural Network Accelerator (Intel® GMM-GNA) and provides optimized traditional computer vision libraries (OpenCV*, OpenVX*), and media encode/decode functions. To learn more and download this free commercial product, visit: https://software.intel.com/en-us/openvino-toolkit

What is Intel Movidius (Neural Compute Stick)?

The Intel® Movidius™ Neural Compute Stick (NCS) is a tiny, fanless deep learning device that you can use to learn AI programming at the edge. The NCS is powered by the same low-power, high-performance Intel Movidius Vision Processing Unit (VPU) that can be found in millions of smart security cameras, gesture-controlled drones, industrial machine vision equipment, and more.

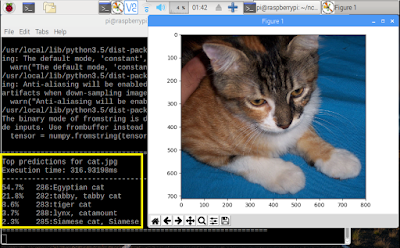

Sample Code Demos

Face Detection, Object Detection (Object Detection C++ Sample SSD)

Identify faces for a variety of uses, such as observing if passengers are in a vehicle or counting indoor pedestrian traffic. Combine it with a person detector to identify who is coming and going.

Pre-trained Face Detection model

https://download.01.org/openvinotoolkit/2018_R4/open_model_zoo/face-detection-adas-0001/FP16/face-detection-adas-0001.bin

https://download.01.org/openvinotoolkit/2018_R4/open_model_zoo/face-detection-adas-0001/FP16/face-detection-adas-0001.xml

To validate OpenCV* installation, you may try to run OpenCV's deep learning module with Inference Engine backend. Here is a Python* sample, which works with Face Detection model.
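The validation described above can be sketched roughly as follows. This is a minimal sketch, not the official sample: it assumes the two model files listed above have been downloaded, that the OpenCV build was compiled with Inference Engine support, and that the network expects a 672x384 input and returns SSD-style rows of seven values. The function names `parse_detections` and `detect_faces` and the 0.5 threshold are illustrative choices.

```python
def parse_detections(rows, frame_width, frame_height, threshold=0.5):
    """Convert SSD-style detection rows into pixel-space boxes.

    Each row is assumed to be
    [image_id, label, confidence, xmin, ymin, xmax, ymax]
    with coordinates normalized to the 0..1 range.
    """
    boxes = []
    for row in rows:
        confidence = float(row[2])
        if confidence < threshold:
            continue  # drop weak detections
        xmin = int(row[3] * frame_width)
        ymin = int(row[4] * frame_height)
        xmax = int(row[5] * frame_width)
        ymax = int(row[6] * frame_height)
        boxes.append((xmin, ymin, xmax, ymax, confidence))
    return boxes


def detect_faces(image_path, xml_path, bin_path, output_path="out.png"):
    """Run the face detection model on one image and save an annotated copy."""
    # Imported lazily so parse_detections stays usable without OpenCV installed.
    import cv2 as cv

    net = cv.dnn.readNet(xml_path, bin_path)
    # Ask OpenCV to run the network through the Inference Engine on the stick.
    net.setPreferableBackend(cv.dnn.DNN_BACKEND_INFERENCE_ENGINE)
    net.setPreferableTarget(cv.dnn.DNN_TARGET_MYRIAD)

    frame = cv.imread(image_path)
    if frame is None:
        raise FileNotFoundError(image_path)

    # Assumed input size for face-detection-adas-0001: 672 wide, 384 high.
    blob = cv.dnn.blobFromImage(frame, size=(672, 384), ddepth=cv.CV_8U)
    net.setInput(blob)
    out = net.forward()

    height, width = frame.shape[:2]
    boxes = parse_detections(out.reshape(-1, 7), width, height)
    for xmin, ymin, xmax, ymax, _ in boxes:
        cv.rectangle(frame, (xmin, ymin), (xmax, ymax), (0, 255, 0), 2)
    cv.imwrite(output_path, frame)
    return boxes
```

Calling `detect_faces("photo.jpg", "face-detection-adas-0001.xml", "face-detection-adas-0001.bin")` with the stick plugged in should write `out.png` with a green rectangle around each detected face; `photo.jpg` is a placeholder for any test image.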

Face Detect Python Code on GitHub

Interactive Face Detection C++ Demo

Age & Gender Recognition

This neural network-based model provides age and gender estimates with enough accuracy to help you focus your marketing efforts.

Emotion Recognition

Identify neutral, happy, sad, surprised, and angry emotions.

Head Pose Estimation

This model shows the position of the head and provides guidance on what caught the subject's attention.

This demo showcases the Object Detection task applied to face recognition using a sequence of neural networks. The Async API can improve the overall frame rate of the application: rather than waiting for inference to complete, the application can continue working on the host while the accelerator is busy. This demo executes four parallel infer requests for the Age/Gender Recognition, Head Pose Estimation, Emotions Recognition, and Facial Landmarks Detection networks, which run simultaneously. You can use a set of the following pre-trained models with the demo:

- face-detection-adas-0001, which is a primary detection network for finding faces

- age-gender-recognition-retail-0013, which is executed on top of the results of the first model and reports estimated age and gender for each detected face

- head-pose-estimation-adas-0001, which is executed on top of the results of the first model and reports estimated head pose in Tait-Bryan angles

- emotions-recognition-retail-0003, which is executed on top of the results of the first model and reports an emotion for each detected face

- facial-landmarks-35-adas-0002, which is executed on top of the results of the first model and reports normed coordinates of estimated facial landmarks

For more information about the pre-trained models, refer to the "Open Model Zoo" repository on GitHub*: https://github.com/opencv/open_model_zoo/blob/master/intel_models/index.md
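The benefit of asynchronous execution mentioned for this demo can be illustrated with a small, library-independent sketch. It does not use the Inference Engine Async API; it uses Python's standard `concurrent.futures` to show the same idea. The names `run_pipelined` and `infer_attributes`, and the `infer`, `postprocess`, and `networks` arguments, are hypothetical stand-ins for the accelerator call, the host-side work, and the secondary networks.

```python
from concurrent.futures import ThreadPoolExecutor


def run_pipelined(frames, infer, postprocess):
    """Overlap inference on the next frame with host work on the current one."""
    results = []
    with ThreadPoolExecutor(max_workers=1) as pool:
        pending = None
        for frame in frames:
            # Start inference for this frame without waiting for it.
            next_request = pool.submit(infer, frame)
            # While it runs, finish the host-side work for the previous frame.
            if pending is not None:
                results.append(postprocess(pending.result()))
            pending = next_request
        # Drain the last outstanding request.
        if pending is not None:
            results.append(postprocess(pending.result()))
    return results


def infer_attributes(face, networks):
    """Run several secondary networks on one face crop at the same time.

    `networks` maps a name (for example "age_gender") to a callable.
    """
    with ThreadPoolExecutor(max_workers=max(1, len(networks))) as pool:
        futures = {name: pool.submit(fn, face) for name, fn in networks.items()}
        return {name: future.result() for name, future in futures.items()}
```

In this sketch `run_pipelined` keeps one request in flight so the host never idles waiting for the device, and `infer_attributes` mirrors the four parallel requests the demo issues per detected face.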

This code sample showcases vehicle detection, vehicle attributes, and license plate recognition.

The demo uses OpenCV to display the resulting frame with detections rendered as bounding boxes and text.

This demo showcases the Vehicle and License Plate Detection network, followed by the Vehicle Attributes Recognition and License Plate Recognition networks, which are applied on top of the detection results. You can use a set of the following pre-trained models with the demo:

- vehicle-license-plate-detection-barrier-0106, which is a primary detection network to find the vehicles and license plates

- vehicle-attributes-recognition-barrier-0039, which is executed on top of the results from the first network and reports general vehicle attributes, for example, vehicle type (car/van/bus/truck) and color

- license-plate-recognition-barrier-0001, which is executed on top of the results from the first network and reports a string per recognized license plate

For more information about the pre-trained models, refer to the "Open Model Zoo" repository on GitHub*: https://github.com/opencv/open_model_zoo/blob/master/intel_models/index.md
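The detection-then-recognition chain used by this demo can be sketched as a small cascade. This is an illustrative sketch, not the demo's source: `crop`, `run_cascade`, the `detect` callable, and the `recognizers` mapping are hypothetical names, and the frame is assumed to be a plain list of pixel rows (with NumPy you would slice `frame[ymin:ymax, xmin:xmax]` instead).

```python
def crop(frame, box):
    """Cut a (xmin, ymin, xmax, ymax) region from a frame stored as pixel rows."""
    xmin, ymin, xmax, ymax = box
    return [row[xmin:xmax] for row in frame[ymin:ymax]]


def run_cascade(frame, detect, recognizers):
    """Run a primary detector, then the matching secondary networks per region.

    `detect(frame)` is assumed to return (label, box) pairs, for example
    ("vehicle", (x0, y0, x1, y1)). `recognizers` maps a label to a dict of
    named callables that each take the cropped region.
    """
    results = []
    for label, box in detect(frame):
        roi = crop(frame, box)
        # Only the recognizers registered for this label are run on the crop.
        attributes = {
            name: fn(roi) for name, fn in recognizers.get(label, {}).items()
        }
        results.append({"label": label, "box": box, "attributes": attributes})
    return results
```

With real models, `detect` would wrap the vehicle and license plate detection network, and `recognizers` would route "vehicle" crops to the attributes network and "plate" crops to the license plate recognition network.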

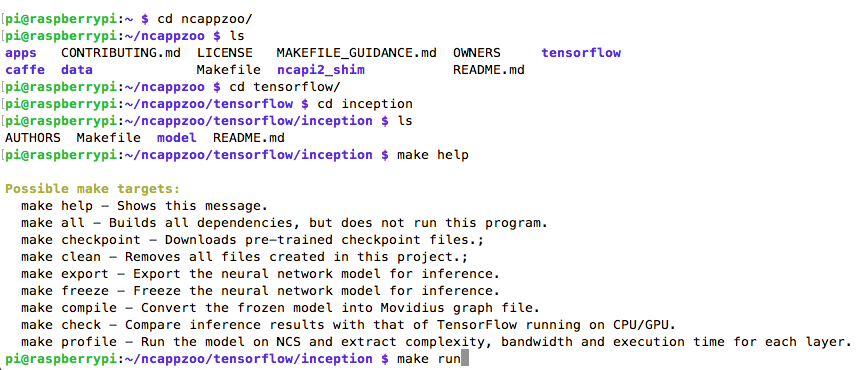

Install OpenVINO on Raspberry Pi

System Requirements

Hardware:

- Raspberry Pi* board with ARMv7-A CPU architecture

- 32GB microSD card

- One of the Intel® Movidius™ Vision Processing Units (VPU), such as the Intel® Movidius™ Neural Compute Stick

Operating Systems:

- Raspbian* Stretch, 32-bit

Your installation is complete when all of the following steps are done:

- Install the Intel® Distribution of OpenVINO™ toolkit.

- Set the environment variables.

- Add USB rules.

- Run the Object Detection Sample and the Face Detection Model (for OpenCV*) to validate your installation.
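Before running the samples, a quick sanity check of the steps above can save some debugging. The sketch below is only an illustration: the variable names in `ASSUMED_VARIABLES` are an assumption about what the toolkit's environment setup exports and may differ between releases, and the helper names are hypothetical.

```python
import os

# Assumption: names of variables exported by the toolkit's environment setup.
# Adjust this tuple to match the release you installed.
ASSUMED_VARIABLES = ("INTEL_OPENVINO_DIR", "InferenceEngine_DIR")


def missing_variables(env, required=ASSUMED_VARIABLES):
    """Return the required variable names that are unset or empty in `env`."""
    return [name for name in required if not env.get(name)]


def opencv_available():
    """Report whether OpenCV with its deep learning module can be imported."""
    try:
        import cv2
    except ImportError:
        return False
    return hasattr(cv2, "dnn")


def installation_report(env=None):
    """Summarize the environment check; defaults to the current process."""
    env = os.environ if env is None else env
    return {
        "missing_variables": missing_variables(env),
        "opencv_dnn": opencv_available(),
    }
```

Running `print(installation_report())` in the same shell where the environment variables were set gives a quick view of what is still missing. It does not check the USB rules or the stick itself, so the Object Detection Sample remains the real validation.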

Reference

Install the Intel® Distribution of OpenVINO™ Toolkit for Raspbian* OS

https://software.intel.com/en-us/openvino-toolkit/documentation/pretrained-models

Inference Engine Samples

http://docs.openvinotoolkit.org/latest/_docs_IE_DG_Samples_Overview.html

OpenVINO, OpenCV, and Movidius NCS on the Raspberry Pi

https://www.pyimagesearch.com/2019/04/08/openvino-opencv-and-movidius-ncs-on-the-raspberry-pi/